August 02, 2022

In the fingerprint community, erroneous exclusions and inconclusive decisions are far more prevalent than erroneous identifications, but they receive far less attention. This led me to examine the observed rates of erroneous exclusions and inconclusive decisions, and to highlight the opportunity they represent for increasing identification rates.

I looked at two landmark papers: Ulery et al from 2011 [1] and Pacheco et al from 2014 [2]. Both were large-scale studies of the accuracy and reliability of fingerprint examiners’ decisions. The Ulery et al study [1] involved 169 examiners and the Pacheco et al study [2] involved 109. Other details are given in Table 1 below.

Table 1 Scale of Research – Ulery et al [1] and Pacheco et al [2]

| | Ulery et al 2011 [1] | Pacheco et al 2014 [2] |
|---|---|---|
| Latents of Value for ID | 10,051 | 4,536 |
| Mated Fingerprint Pairs | 5,969 | 3,198 |
| Non-mated Fingerprint Pairs | 4,082 | 1,398 |
| Number of Examiners | 169 | 109 |

The examiners’ decisions summarized in Table 2 were not verified, i.e., they were not examined and supported by a second fingerprint examiner. While Pacheco et al [2] did look at verification, their study focused primarily on verification of identification decisions rather than exclusions or inconclusive decisions. Both studies found the same rate of erroneous exclusions: 7.5% of all matching (mated) fingerprint pairs examined. This suggests that, without verification, erroneous exclusions in casework could occur for as many as 7.5% of all matching pairs.

The rate of inconclusive decisions made when the matching print was in fact present was even higher in both studies: 31.1% of all matching pairs in Ulery et al [1] and 14.2% in Pacheco et al [2]. Adding the erroneous exclusion rate to the inconclusive rate suggests that the total rate of undetected identifications could range from 21.7% (based on Pacheco et al [2]) to 38.6% (based on Ulery et al [1]). This would appear to leave considerable room for increasing the rate of identifications.

Table 2 Results – Ulery et al [1] and Pacheco et al [2]

| | Ulery et al 2011 [1] | Pacheco et al 2014 [2] |
|---|---|---|
| Erroneous Exclusion Rate | 7.5% | 7.5% |
| Inconclusive Decision (Matching print present) | 31.1% | 14.2% |
| Total Undetected IDs | 38.6% | 21.7% |
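The totals in Table 2 are simply the erroneous exclusion rate plus the inconclusive rate, since both are expressed as a share of mated pairs. The short Python sketch below reproduces that sum and, purely for a sense of scale, converts it into an approximate number of mated pairs. Treating the Table 1 mated-pair counts as the exact denominators of the published rates is an assumption, so the derived counts are only rough.

```python
# Rates from Table 2, each expressed as a percentage of mated (matching) pairs.
# Mated-pair counts from Table 1. ASSUMPTION: these counts are the denominators
# of the published rates, so the derived counts below are only approximate.
STUDIES = {
    "Ulery et al [1]": {"exclusion": 7.5, "inconclusive": 31.1, "mated_pairs": 5969},
    "Pacheco et al [2]": {"exclusion": 7.5, "inconclusive": 14.2, "mated_pairs": 3198},
}


def undetected_id_rate(exclusion_pct: float, inconclusive_pct: float) -> float:
    """Mated pairs that did not end in an identification, in percent.

    Both inputs share the same denominator, so they can simply be added.
    """
    return round(exclusion_pct + inconclusive_pct, 1)


def approx_count(rate_pct: float, mated_pairs: int) -> int:
    """Approximate number of mated pairs corresponding to a percentage."""
    return round(rate_pct / 100 * mated_pairs)


for name, s in STUDIES.items():
    total = undetected_id_rate(s["exclusion"], s["inconclusive"])
    n = approx_count(total, s["mated_pairs"])
    print(f"{name}: {total}% undetected (~{n} of {s['mated_pairs']} mated pairs)")
```

Even on this rough reading, the Ulery et al rate corresponds to a couple of thousand mated pairs that did not end in an identification.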

So what can be done to avoid erroneous exclusions and, where appropriate, make more correct identifications rather than inconclusive decisions? An obvious improvement would be to apply a verification protocol to all examiner decisions. Many agencies require all identification decisions to be verified; however, most do not verify exclusion and inconclusive decisions, because verifying every decision would in effect double the casework, particularly if blind verification is performed.

The recent paper by Marcus Montooth [3] provides excellent insight into the application of a thorough quality control protocol to fingerprint examination. Over a three-year period from 2013 to 2015, all casework decisions by ten examiners at the Indiana State Police were subjected to 100% technical review before cases were released. All identifications and exclusions were also verified before review. The verification process was not blind. Because this was a study of casework, ground truth was not known.

Over the three years, verification changed 14 identifications (0.5% of all identifications) and 9 exclusions (2% of all exclusions) to inconclusive decisions. No erroneous identifications or exclusions were detected. Inconclusive decisions were not verified. Notably, Table 3 shows that inconclusive decisions accounted for about 60% of latent print comparisons, while identifications and exclusions together made up only about 40%. Verifying inconclusive decisions as well would therefore increase the labour involved in verification roughly two-and-a-half-fold.

Table 3 Scale of Research – Montooth [3]

| | Montooth 2019 [3] |
|---|---|
| Latents of Value | 7,903 |
| Verified Identifications* | 2,682 (33.9%) |
| Verified Exclusions* | 422 (5.3%) |
| Inconclusive | 4,799 (60.7%) |
| Cases | 1,902 |

*The verification step changed 14 identifications and 9 exclusions to inconclusive.
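The verification workload implied by Table 3 can be checked in a few lines. This Python sketch uses the final counts from Table 3; treating the identification and exclusion counts as the number of verifications actually performed is a simplifying assumption, since 23 decisions were moved to inconclusive during verification.

```python
# Final decision counts from Table 3 (Montooth [3]).
LATENTS_OF_VALUE = 7903
IDENTIFICATIONS = 2682
EXCLUSIONS = 422
INCONCLUSIVE = 4799


def share(count: int, total: int = LATENTS_OF_VALUE) -> float:
    """Percentage of latents of value, rounded to one decimal place."""
    return round(100 * count / total, 1)


def workload_factor(verified_now: int, verified_all: int) -> float:
    """How many times larger verification becomes if every decision is verified."""
    return verified_all / verified_now


# Sanity check: the three decision types account for every latent of value.
assert IDENTIFICATIONS + EXCLUSIONS + INCONCLUSIVE == LATENTS_OF_VALUE

# Only identifications and exclusions were verified in the study.
# Simplification: uses final counts, ignoring the 23 decisions that
# verification moved to inconclusive.
verified_now = IDENTIFICATIONS + EXCLUSIONS
factor = workload_factor(verified_now, LATENTS_OF_VALUE)

print(f"Inconclusive: {share(INCONCLUSIVE)}% of latents of value")
print(f"Verified today: {verified_now} ({share(verified_now)}%)")
print(f"Verifying every decision: {factor:.2f}x the current workload")
```

Using the pre-verification counts instead (2,696 identifications and 431 exclusions) gives almost the same factor, so the simplification does not change the conclusion.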

A technical review was applied to all latent prints of value from casework during the Montooth study [3], including identifications, exclusions and inconclusive decisions. This consisted of reviewing the case notes, ensuring proper procedures were followed, and reviewing all lifts, images, latents and conclusions. The results are shown in Table 4. No erroneous identifications or erroneous exclusions were detected. Forty-five inconclusive decisions were changed to identifications, equivalent to 1.7% of the total identifications. In addition, 11 latents initially judged to be “not of value” were changed to “of value” and subsequently identified during review, representing 0.4% of the total identifications. The review process therefore produced 56 additional identifications, or 2.1% of the total identifications, indicating that technical review was effective in detecting missed identifications. Because the study was based on casework rather than ground-truthed data, it is unknown whether any identifications were missed in casework and not detected during verification or review.

Table 4 Results – Montooth [3]

| | Montooth 2019 [3] |
|---|---|
| Erroneous Exclusions Detected | None |
| Erroneous Identifications Detected | None |
| Inconclusive Decisions changed to ID (Matching print present) | 45 (1.7% of all IDs) |
| Latents “not of value” changed to “of value” and ID’ed | 11 (0.4% of all IDs) |
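The percentages in Table 4 follow directly from the counts. The sketch below assumes they are relative to the 2,682 identifications listed in Table 3, which reproduces the figures quoted in the text; the constant names are illustrative.

```python
# Counts from Tables 3 and 4 (Montooth [3]).
# ASSUMPTION: percentages are relative to the 2,682 identifications in Table 3,
# which reproduces the figures quoted in the text.
TOTAL_IDS = 2682
INCONCLUSIVE_TO_ID = 45
NOT_OF_VALUE_TO_ID = 11


def pct_of_ids(n: int, total_ids: int = TOTAL_IDS) -> float:
    """Express a number of identifications as a percentage of all IDs."""
    return round(100 * n / total_ids, 1)


additional_ids = INCONCLUSIVE_TO_ID + NOT_OF_VALUE_TO_ID
for label, n in [
    ("Inconclusive changed to ID", INCONCLUSIVE_TO_ID),
    ("Not of value changed to ID", NOT_OF_VALUE_TO_ID),
    ("Total added by review", additional_ids),
]:
    print(f"{label}: {n} ({pct_of_ids(n)}% of all IDs)")
```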

If the results observed by Ulery et al [1] and Pacheco et al [2] are generally representative of fingerprint examination, some identifications are probably not detected by examiners even with verification. While an identification is undoubtedly impossible for some matching pairs because of distortion, a lack of features, poor known-print quality or other factors, there may be an opportunity for software-assisted matching to help examiners catch some of the identifications that would otherwise go undetected.

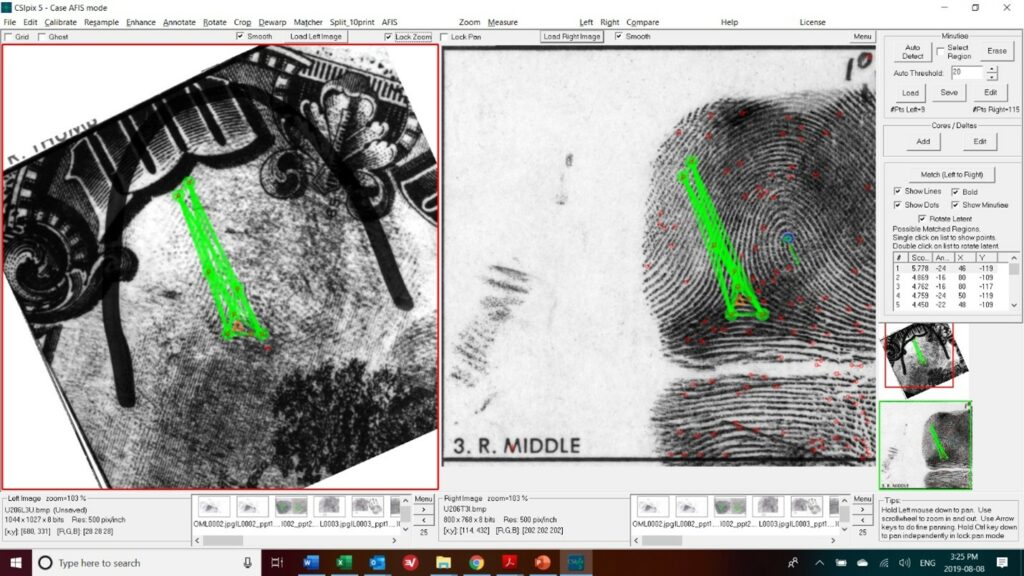

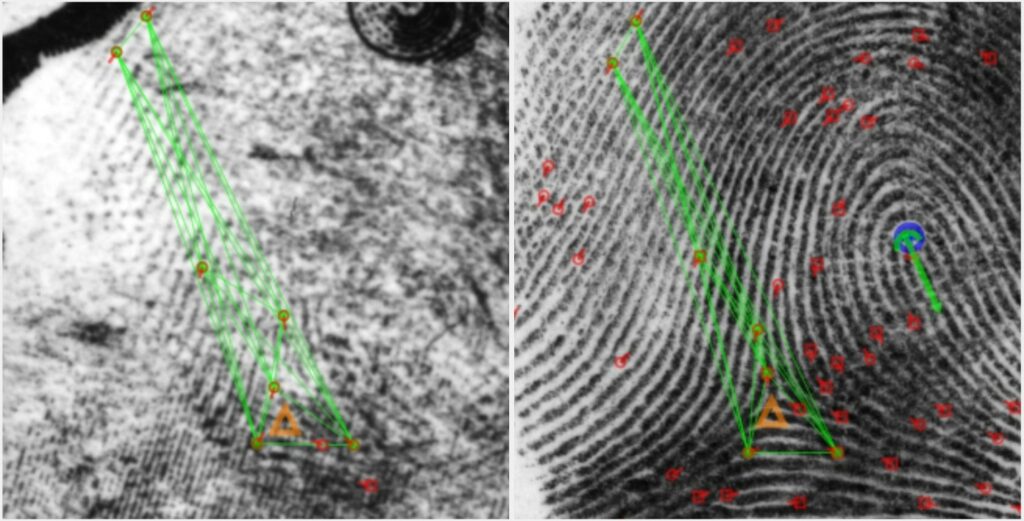

Fingerprint matching software can perform an automated search of the minutia pattern (and other fingerprint data) on a latent print against all the minutiae (and corresponding fingerprint data) on a known print and highlight regions on the known print that match (within a margin of error) the latent. The latent is rotated so that the matching regions are at the same orientation, which allows the fingerprint examiner to conduct further evaluation if warranted and make their decision (See Fig. 1 and Fig. 2 below).

A 2015 study by Langenburg et al [4] applied an early case AFIS software to archived casework data from the Minnesota Bureau of Criminal Apprehension. Langenburg et al [4] observed that the case AFIS search process found 7 instances out of 191 latent prints where an identification was made that was previously undetected in casework. On the other hand, the case AFIS missed 5 of the 49 identifications made by examiners in casework. The authors concluded that case AFIS technology, while not perfect, could be used as an additional tool to enhance and supplement traditional examination processes. I agree that software-assisted matching is simply a tool to help fingerprint examiners determine where to look for potential matches; the actual evaluation decision must of course be made by the examiner. I would also note that matching algorithms are now more effective compared to 2015.

Figure 1

Figure 2
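The search-and-align step described above can be illustrated with a classic alignment-based minutiae matcher. The Python below is a minimal sketch under simplifying assumptions, not the algorithm used by CSIpix or by any case AFIS product: each minutia is reduced to (x, y, angle), every latent/known minutia pair is tried as an anchor for a rigid rotation and translation, and the alignment that pairs the most minutiae within the distance and angle tolerances is kept. The function names (`align`, `pair_minutiae`) and the tolerance defaults are hypothetical.

```python
import math
from typing import List, Tuple

# A minutia as (x, y, ridge direction in radians). Real systems store more
# (type, quality, neighbourhood); this sketch uses position and angle only.
Minutia = Tuple[float, float, float]
TWO_PI = 2 * math.pi


def angle_diff(a: float, b: float) -> float:
    """Smallest absolute difference between two angles, in radians."""
    d = (a - b) % TWO_PI
    return min(d, TWO_PI - d)


def transform(m: Minutia, rot: float, tx: float, ty: float) -> Minutia:
    """Rotate a minutia about the origin by rot, then translate by (tx, ty)."""
    x, y, t = m
    c, s = math.cos(rot), math.sin(rot)
    return (c * x - s * y + tx, s * x + c * y + ty, (t + rot) % TWO_PI)


def pair_minutiae(latent, known, rot, tx, ty, dist_tol, ang_tol):
    """Greedily pair each transformed latent minutia with the nearest unused
    known minutia that lies within both the distance and angle tolerances."""
    used = set()
    pairs = []
    for i, m in enumerate(latent):
        x, y, t = transform(m, rot, tx, ty)
        best_j, best_d = None, dist_tol
        for j, (kx, ky, kt) in enumerate(known):
            if j in used or angle_diff(t, kt) > ang_tol:
                continue
            d = math.hypot(x - kx, y - ky)
            if d <= best_d:
                best_j, best_d = j, d
        if best_j is not None:
            used.add(best_j)
            pairs.append((i, best_j))
    return pairs


def align(latent: List[Minutia], known: List[Minutia],
          dist_tol: float = 10.0, ang_tol: float = math.radians(15)):
    """Use every latent/known minutia pair as an anchor for a rigid alignment
    and keep the alignment that pairs the most minutiae.

    Returns (score, rotation, tx, ty, pairs), where pairs lists
    (latent index, known index) correspondences.
    """
    best = (0, 0.0, 0.0, 0.0, [])
    for lx, ly, lt in latent:
        for kx, ky, kt in known:
            rot = (kt - lt) % TWO_PI
            c, s = math.cos(rot), math.sin(rot)
            tx = kx - (c * lx - s * ly)
            ty = ky - (s * lx + c * ly)
            pairs = pair_minutiae(latent, known, rot, tx, ty, dist_tol, ang_tol)
            if len(pairs) > best[0]:
                best = (len(pairs), rot, tx, ty, pairs)
    return best


# Demo with synthetic data: the "latent" is four of the known minutiae,
# shifted and rotated by -30 degrees, as if captured at a different orientation.
known = [(10, 20, 0.3), (50, 40, 1.2), (80, 15, 2.0), (30, 70, 4.0), (60, 90, 5.5)]
true_rot, true_tx, true_ty = math.pi / 6, 5.0, -3.0
latent = []
for kx, ky, kt in known[:4]:
    # Invert transform(): undo the translation, then rotate by -true_rot.
    x, y = kx - true_tx, ky - true_ty
    c, s = math.cos(-true_rot), math.sin(-true_rot)
    latent.append((c * x - s * y, s * x + c * y, (kt - true_rot) % TWO_PI))

score, rot, tx, ty, pairs = align(latent, known)
print(f"{score} paired minutiae, rotation {math.degrees(rot):.1f} deg, pairs {pairs}")
```

The recovered rotation is what would let a tool display the latent at the same orientation as the matching region of the known print; the examiner still decides whether the highlighted correspondence supports an identification. Production matchers add richer features, quality weighting and tolerance for skin distortion, all of which this brute-force version omits.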

Some agencies are currently using software-assisted matching as a quality control measure. In this approach, after an examiner completes the ACE or ACE-V process and before an inconclusive or exclusion decision is finalized, the matching software is used to look for potential matching regions between the two friction ridge images being examined. Anecdotal evidence from agencies using software-assisted matching indicates that it does sometimes help catch identifications missed in the initial manual analysis. More quantitative work in this area is needed and would be welcome.
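The quality-control step described above amounts to a simple routing rule. The sketch below is hypothetical: `qc_route`, the `Matcher` interface, the score threshold and the demo scores are all assumptions for illustration, not any agency's protocol or any vendor's API. It only flags inconclusive and exclusion decisions for a second look; the software never changes a decision itself.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List


class Decision(Enum):
    IDENTIFICATION = "identification"
    EXCLUSION = "exclusion"
    INCONCLUSIVE = "inconclusive"


@dataclass
class Comparison:
    comparison_id: str
    decision: Decision  # examiner's conclusion after ACE / ACE-V


# Hypothetical matcher interface: returns similarity scores for candidate
# corresponding regions between the latent and the known print.
Matcher = Callable[[Comparison], List[float]]


def qc_route(comp: Comparison, matcher: Matcher, threshold: float) -> str:
    """Decide what happens to a comparison before it is finalized.

    The software never changes a decision; it only sends the comparison back
    to the examiner when it finds candidate regions worth a second look.
    """
    if comp.decision is Decision.IDENTIFICATION:
        return "verify"  # identifications follow the agency's existing verification
    if any(score >= threshold for score in matcher(comp)):
        return "return_to_examiner"
    return "finalize"


def demo_matcher(comp: Comparison) -> List[float]:
    """Stand-in for real matching software, with made-up scores."""
    return {"c1": [0.82, 0.40], "c2": [0.15]}.get(comp.comparison_id, [])


for comp in [
    Comparison("c1", Decision.INCONCLUSIVE),
    Comparison("c2", Decision.EXCLUSION),
    Comparison("c3", Decision.IDENTIFICATION),
]:
    print(comp.comparison_id, qc_route(comp, demo_matcher, threshold=0.7))
```

Keeping the matcher behind a plain callable means the routing rule does not depend on any particular product, and the threshold becomes an explicit, auditable agency setting.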

References

[1] Ulery, B.T.; Hicklin, R.A.; Buscaglia, J.; Roberts, M.A. Accuracy and Reliability of Forensic Latent Fingerprint Decisions. Proc. Natl. Acad. Sci. 2011, 108 (19), 7733–7738.

[2] Pacheco, I.; Cerchiai, B.; Stoiloff, S. Miami-Dade Research Study for the Reliability of the ACE-V Process: Accuracy & Precision in Latent Fingerprint Examinations. NCJRS Doc. No. 248534, Dec. 2014.

[3] Montooth, M.S. Errors in Latent Print Casework Found in Technical Reviews. J. Forensic Identif. 2019, 69 (2), 125–140.

[4] Langenburg, G.; Hall, C.; Rosemarie, Q. Utilizing AFIS Searching Tools to Reduce Errors in Fingerprint Casework. Forensic Sci. Int. 2015, 257, 123–133.

John Guzzwell

M.Sc., MBA, P.Eng

Over 30 years of experience in technology development and commercialization, with a focus on software for forensic image enhancement, comparison, and identification.

Member of the IAI and co-founder and VP of Business Development with CSIpix.
